AI is changing the world faster than we ever imagined. It helps us work smarter, connect more easily, and solve problems quickly. But while we often talk about its benefits, a growing issue stays out of the spotlight: AI's environmental impact. Behind every smart device and powerful AI tool is a system that consumes enormous amounts of energy.

This energy use leads to a rising carbon footprint that threatens our planet's health. As AI becomes part of everyday life, it's time we ask an important question: How can we balance technological progress with caring for the environment?

AI systems have become the engine behind many of the smart tools we use today. But powering this intelligence comes at a hidden environmental cost. Large AI models, like the ones behind ChatGPT, depend on enormous amounts of data and computing power to function. Training these models is neither quick nor easy: it can take weeks or even months of nonstop processing on powerful servers. These servers are housed in large data centers, which need electricity not only to run but also to stay cool. Without constant cooling, the machines would overheat and fail.

By one widely cited 2019 estimate, the energy needed to train a single large AI model, including the extensive trial-and-error search used to tune its design, can produce as much carbon dioxide as five cars over their entire lifetimes, fuel included. And the energy use doesn't stop after training. Every time we use AI, whether asking a chatbot a question or receiving personalized recommendations online, more electricity is consumed.

Industries like healthcare, finance, and online retail depend heavily on real-time AI processing. To meet growing demand, companies operate huge server farms across the globe, many of which still rely on fossil fuels. While some progress has been made in using renewable energy, AI's environmental impact, especially its growing carbon footprint, remains a serious global challenge.

Data centers are at the heart of AI operations. These facilities house thousands of servers responsible for data storage and processing. Their electricity consumption is staggering, and unless powered by renewable energy, they contribute heavily to greenhouse gas emissions.

Most data centers are powered by a mix of energy sources, including fossil fuels like coal and natural gas. These sources are major contributors to carbon emissions. Although some technology companies have started investing in renewable energy to power their data centers, the transition is slow and expensive.

In addition to energy consumption, data centers require vast amounts of water for cooling systems, adding another layer to AI's environmental impact. The growing demand for AI services means that the number of data centers is increasing worldwide, raising questions about the sustainability of this expansion.

The carbon footprint of data centers also varies depending on their location. Data centers in regions that rely on coal or gas for electricity generation have a larger carbon footprint than those in areas powered by hydropower or solar energy, even when they do exactly the same work.
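To see why location matters so much, note that a data center's operational emissions are roughly its electricity use multiplied by the carbon intensity of the local grid. The short Python sketch below runs the same hypothetical 1,000,000 kWh workload through three kinds of grid. The intensity figures are assumed round numbers chosen for illustration, not measurements for any real grid, and the function name is hypothetical.

```python
# Illustrative grid carbon intensities in kg CO2e per kWh.
# These are ASSUMED round numbers for the example, not measured data for real grids.
GRID_INTENSITY_KG_PER_KWH = {
    "coal-heavy grid": 0.9,
    "gas-heavy grid": 0.45,
    "hydro-heavy grid": 0.03,
}

# Hypothetical workload: the same 1,000,000 kWh job run in each region.
WORKLOAD_KWH = 1_000_000


def estimate_emissions_kg(energy_kwh: float, intensity_kg_per_kwh: float) -> float:
    """Operational emissions = electricity consumed x carbon intensity of the grid."""
    if energy_kwh < 0 or intensity_kg_per_kwh < 0:
        raise ValueError("energy and carbon intensity must be non-negative")
    return energy_kwh * intensity_kg_per_kwh


for region, intensity in GRID_INTENSITY_KG_PER_KWH.items():
    tonnes = estimate_emissions_kg(WORKLOAD_KWH, intensity) / 1000  # kg -> tonnes
    print(f"{region}: about {tonnes:,.0f} t CO2e")
```

With these assumed figures, the coal-heavy grid comes out about 30 times higher than the hydro-heavy one for identical work. Real carbon accounting also has to consider cooling overhead, hardware manufacturing, and how renewable energy purchases are counted, all of which this sketch ignores.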

AI's footprint is not limited to running data centers. The process of designing, testing, and deploying AI models also adds to it. Research teams often run many experiments to fine-tune their models, and each run consumes energy.

Moreover, machine learning requires access to large datasets stored on servers. Gathering, cleaning, and maintaining these datasets requires additional computational resources. The environmental cost of AI development rises even more when companies compete to build the largest and most powerful models. Bigger models need more data and more computing power, and so ultimately leave a larger carbon footprint.
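A common rule of thumb for dense transformer models puts training compute at roughly 6 × N × D floating-point operations, where N is the number of parameters and D is the number of training tokens. The sketch below applies it to two hypothetical model sizes. It estimates compute, not energy; converting it to kilowatt-hours would require assumptions about hardware efficiency and utilization that vary widely.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense transformer: C ~ 6 * N * D."""
    return 6 * n_params * n_tokens


# Hypothetical configurations, chosen only to illustrate scaling.
small = training_flops(n_params=1e9, n_tokens=20e9)    # 1B parameters, 20B tokens
large = training_flops(n_params=10e9, n_tokens=200e9)  # 10B parameters, 200B tokens

print(f"small model: {small:.1e} FLOPs")
print(f"large model: {large:.1e} FLOPs")
print(f"ratio: {large / small:.0f}x")
```

Scaling both the parameter count and the dataset by 10x multiplies the estimated compute by about 100x, which is why the race toward ever-larger models weighs so heavily on energy use.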

AI-powered technologies used in consumer devices like smartphones, smart speakers, and home assistants also leave an environmental trace. Every search query, voice command, or recommendation processed by AI consumes energy on remote cloud servers. As these devices become more common, the cumulative carbon footprint grows.

The growing awareness of AI's environmental impact has sparked debates about responsible AI development. Many experts believe that future AI models should be evaluated not only on their accuracy or speed but also on their energy efficiency.

Reducing AI’s environmental impact requires a combination of technological innovation, policy changes, and corporate responsibility. One of the most promising solutions is to use renewable energy to power data centers. Tech giants like Google, Microsoft, and Amazon have already pledged to move toward 100% renewable energy for their operations. However, smaller companies may struggle with the cost and availability of renewable energy sources.

Improving the energy efficiency of AI models is another critical step. Researchers are exploring new algorithms that require less computing power without sacrificing performance. Techniques like model compression and knowledge distillation are being used to reduce the size and energy needs of AI systems.
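Knowledge distillation trains a smaller "student" model to mimic a larger "teacher." One common formulation, from Hinton and colleagues' 2015 paper, mixes two terms: how far the student's softened output distribution is from the teacher's (a KL divergence at temperature T, scaled by T squared), and ordinary cross-entropy against the true label. The plain-Python sketch below computes that loss for a single example. The function names and the default values for `temperature` and `alpha` are illustrative assumptions; real training code would use a framework such as PyTorch or JAX and batch the computation.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax: higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """Weighted mix of a soft-target loss (match the teacher) and a hard-label loss."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) between the temperature-softened distributions.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Multiply by T^2 so the soft term's gradients keep a comparable scale.
    soft_loss = temperature ** 2 * kl
    # Standard cross-entropy of the student (at T = 1) against the true label.
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


# Hypothetical three-class outputs for one training example.
teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.2, 0.8]
print(f"distillation loss: {distillation_loss(student, teacher, true_label=0):.4f}")
```

Because the student only has to reproduce the teacher's behavior rather than learn everything from scratch, it can often be made much smaller, and therefore cheaper to run, while keeping much of the teacher's accuracy.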

Data center design is also evolving to support sustainability. Some facilities are being built in cooler climates to reduce the need for air conditioning, and others use advanced cooling technologies, like liquid cooling systems, that are more energy-efficient than traditional air conditioning.
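A standard way to measure how much of a facility's energy goes to overhead such as cooling is Power Usage Effectiveness (PUE): total facility energy divided by the energy used by the IT equipment itself, so a PUE of 1.0 would mean zero overhead. The sketch below compares two hypothetical facilities with the same IT load. The PUE values of 1.6 and 1.2 are assumed for illustration, not figures for any particular site, and the helper names are hypothetical.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by a PUE value (PUE = facility energy / IT energy)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_kwh(it_energy_kwh: float, pue: float) -> float:
    """Energy spent on cooling, power conversion, lighting, etc. instead of computing."""
    return facility_energy_kwh(it_energy_kwh, pue) - it_energy_kwh


# Hypothetical annual IT load, identical for both facilities.
IT_LOAD_KWH = 10_000_000

# Assumed PUE values for illustration only.
FACILITIES = {
    "conventional air cooling": 1.6,
    "liquid / free cooling": 1.2,
}

for name, pue in FACILITIES.items():
    print(f"{name}: {overhead_kwh(IT_LOAD_KWH, pue):,.0f} kWh of overhead per year")
```

Under these assumptions, the more efficient design cuts overhead from roughly 6 million to roughly 2 million kWh a year for the same computing work.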

Regulatory bodies and governments also have a role to play. Setting guidelines for the energy consumption of AI models and encouraging investment in green technologies can accelerate progress toward sustainable AI.

Lastly, raising public awareness about AI's carbon footprint is essential. Users should understand that every online search or voice command has an environmental cost. Encouraging responsible usage can help reduce unnecessary energy consumption.

AI's environmental impact is a growing concern that demands attention. While AI technology brings convenience and innovation, it also leaves behind a significant carbon footprint due to high energy consumption. The future of AI must focus on sustainability by using renewable energy, improving model efficiency, and adopting greener practices in data centers. Companies, researchers, and users all have a role in reducing AI's environmental costs. By making conscious choices today, we can ensure that AI continues to improve our lives without causing long-term harm to the environment.

How AI APIs from Google Cloud AI, IBM Watson, and OpenAI are helping businesses build smart applications, automate tasks, and improve customer experiences

JFrog launches JFrog ML through the combination of Hugging Face and Nvidia, creating a revolutionary MLOps platform for unifying AI development with DevSecOps practices to secure and scale machine learning delivery.

YouTube channels to learn SQL, The Net Ninja, The SQL Guy

Drive more traffic with ChatGPT's backend keyword strategies by uncovering long-tail opportunities, enhancing content structure, and boosting search intent alignment for sustainable organic growth

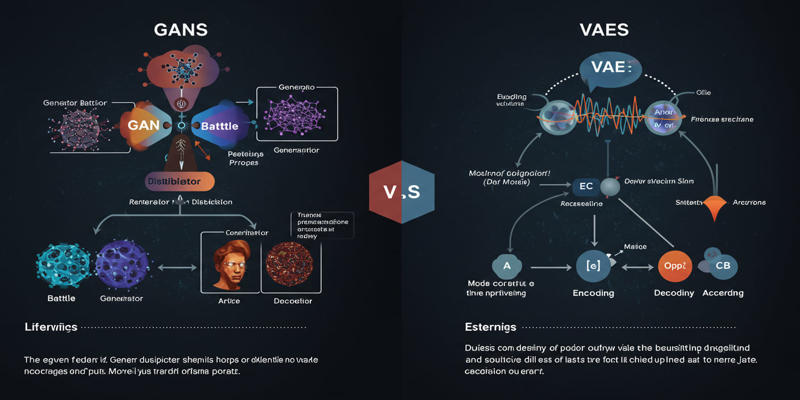

Study the key distinctions that exist between GANs and VAEs, which represent two main generative AI models.

Explore Skimpy, a fast and readable tool that outperforms Pandas describe() in summarizing all data types in Python.

Want to run AI without the cloud? Learn how to run LLM models locally with Ollama—an easy, fast, and private solution for deploying language models directly on your machine

AI and the Metaverse are shaping the future of online communication by making virtual interactions smarter, more personal, and highly engaging across digital spaces

Discover these 7 AI powered grammar checkers that can help you avoid unnecessary mistakes in your writing.

Master how to use DALL-E 3 API for image generation with this detailed guide. Learn how to set up, prompt, and integrate OpenAI’s DALL-E 3 into your creative projects

Discover how autonomous robots can boost enterprise efficiency through logistics, automation, and smart workplace solutions

Build a simple LLM translation app using LangChain, LCEL, and GPT-4 with step-by-step guidance and API deployment.